Welcome! 🙌 This week, we explored yet another domain where Large Language Models have made an impact. MarioGPT is the first text-to-level machine learning model, recently published by Shyam Sudhakaran and his colleagues at the IT University of Copenhagen (yes, self-promo everywhere, sorry… 😏). The paper has gained a lot of attention worldwide, receiving hundreds of ⭐ on GitHub in just a few days. Below, you can see the model generating a level column by column (credit to Shyam for the gif 👐). How cool is that? 🤩

The use of AI in games to automatically generate content is not a new concept. Generating engaging levels that respect the game mechanics while remaining playable has been a challenge in the field of Procedural Content Generation (PCG) for several decades. Traditional PCG methods date back to the 1980s, when Rogue and similar roguelike games used PCG to create ASCII dungeons (areas where the player encounters enemies), laying out rooms, hallways, monsters, and treasure to challenge the player (see the figure below). From those early days to recent advances in machine learning, PCG has come a long way. MarioGPT combines large language models with novelty search to generate diverse and functional game levels. What sets it apart from other algorithms is that it not only generates engaging levels but also predicts the paths an agent would take through them. Enjoy the read! 👇

🍄 MarioGPT

📍 Background. The role of procedural content generation (PCG) in game development keeps growing every year along with the global gaming community. At the same time, expectations for studios to produce engaging games are higher than ever. As a result, manual content creation has become costly and time-consuming, and it simply does not scale with current demand. With recent advances in machine learning, there have been growing efforts to bring deep learning into game level generation. For instance, Generative Adversarial Networks (GANs) have become popular because of their ability to create image-like content. However, these methods give level designers only limited control over the generation process. A model that takes a simple text description and generates an engaging, playable level that conforms to the game mechanics would therefore be a significant step forward for PCG. Let’s dive into how Shyam and his team achieved this!

📑 Learning Objectives. The model needs to learn two important things. First, it needs to understand the different tile types and how they are typically placed. Second, it needs to keep players engaged by generating levels with varying paths (also known as diversity). Additionally, the model should be able to understand text prompts from the user.

🎮 Game understanding. Shyam Sudhakaran et al. used a distilled (smaller) version of GPT-2, a decoder-only Transformer, to give the model an understanding of the game. They fine-tuned it on Super Mario Bros. levels from the publicly available Video Game Level Corpus (VGLC). The entire pipeline is shown in the figure below.
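
Fine-tuning a decoder-only model such as GPT-2 amounts to next-token prediction over token sequences. The sketch below shows, under simplifying assumptions, how a Mario level stored as rows of characters could become such training data: the level is assumed to be read column by column (top to bottom), and the targets are the inputs shifted by one position. The `TILE_VOCAB` mapping and all helper names are hypothetical, and for brevity the sketch uses a plain one-id-per-tile lookup instead of the Byte Pair Encoding tokenizer MarioGPT uses; it is not the authors' data pipeline.

```python
# Hypothetical tile vocabulary: a small subset of VGLC Super Mario Bros. symbols.
TILE_VOCAB = {"-": 0, "X": 1, "S": 2, "?": 3, "E": 4, "<": 5, ">": 6, "[": 7, "]": 8, "o": 9}


def flatten_columns(rows: list[str]) -> str:
    """Read a level column by column, top to bottom, into one string."""
    height, width = len(rows), len(rows[0])
    return "".join(rows[r][c] for c in range(width) for r in range(height))


def encode(level_str: str) -> list[int]:
    """Map each tile character to its integer id (one token per tile)."""
    return [TILE_VOCAB[ch] for ch in level_str]


def next_token_pairs(tokens: list[int]) -> tuple[list[int], list[int]]:
    """Inputs are tokens[:-1]; targets are the same sequence shifted left by one."""
    return tokens[:-1], tokens[1:]


# A tiny 4x4 toy level: empty sky, one question block, and a row of ground.
level = [
    "----",
    "-?--",
    "----",
    "XXXX",
]
tokens = encode(flatten_columns(level))
# tokens → [0, 0, 0, 1, 0, 3, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1]
inputs, targets = next_token_pairs(tokens)
```

During fine-tuning, the model sees `inputs` and is penalised (typically with cross-entropy) for every position where its predicted next token differs from the matching entry in `targets`.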

To begin with, the text prompt is tokenized and mapped to dense embeddings by a pretrained language model, BART. This model is frozen, meaning its weights are not updated during training. The token embeddings are averaged into a single prompt embedding, which conditions the level generator through cross-attention.

Next, a game level is randomly selected, and 50 of its columns, represented as a string of characters, are tokenized into discrete values (such as 2, 14, etc.) using a Byte Pair Encoding tokenizer. These values are concatenated into a single sequence and fed into the GPT layers. Inside these layers, cross-attention lets the level tokens (the queries) attend to the prompt embedding (the keys and values), so the text steers every prediction. The final layer outputs a probability distribution over the possible next tokens, and the model is trained to predict each next token of the selected level so that its output closely matches it. This concludes the fine-tuning process. 👌
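
To see the query/key/value roles concretely, here is a minimal single-head cross-attention sketch in plain Python. It assumes tiny hypothetical 4-dimensional embeddings and skips the learned projection matrices, multiple heads and residual connections of a real GPT-2 block, so it illustrates the mechanism rather than MarioGPT's implementation. The prompt embeddings are mean-pooled into one vector that serves as both key and value, and each level-token hidden state serves as a query.

```python
import math


def mean_pool(embeddings: list[list[float]]) -> list[float]:
    """Average token embeddings element-wise into a single prompt vector."""
    n = len(embeddings)
    return [sum(col) / n for col in zip(*embeddings)]


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query attends over all keys/values.

    Projection matrices are omitted (identity) to keep the sketch short.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs


# Hypothetical embeddings for a 3-token prompt (keys/values source).
prompt_tokens = [[1.0, 0.0, 2.0, 0.0], [3.0, 0.0, 0.0, 1.0], [2.0, 3.0, 1.0, 2.0]]
prompt_vec = mean_pool(prompt_tokens)  # [2.0, 1.0, 1.0, 1.0]

# Hypothetical hidden states for two level tokens (the queries).
level_states = [[0.5, 0.1, 0.0, 0.3], [0.0, 1.0, 0.2, 0.0]]

# Pooled prompt: a single key/value pair shared by every level token.
out = cross_attention(level_states, keys=[prompt_vec], values=[prompt_vec])

# Unpooled prompt: each level token gets its own weighting over prompt tokens.
out_multi = cross_attention(level_states, keys=prompt_tokens, values=prompt_tokens)
```

One design consequence worth noting: with a single pooled prompt vector the softmax has only one entry, so every level token receives the same prompt-derived value (here `out` is `[2.0, 1.0, 1.0, 1.0]` for both tokens). Keeping several prompt token embeddings, as in `out_multi`, lets each level token weight the prompt tokens differently.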

💯 Diverse and Engaging Levels. Once MarioGPT has gained some understanding of the Mario game, the next goal is to use it to generate new and creative levels that keep the player engaged. To achieve this, Shyam Sudhakaran et al. combine it with a technique called Novelty Search, as shown in the figure below.

First, an archive with novel levels is created by selecting some initial levels. Next, a random level is sampled from the archive and mutated using the following steps:

- (sampling) A random slice of 40-80 columns is selected from the level.

- (mutation) MarioGPT regenerates the slice, guided by a random text prompt.

- (in-painting) Since MarioGPT is a unidirectional model and does not consider future tokens, the transformed slice is stitched back into the rest of the original level using MarioBert, a bidirectional BERT-like model, to keep the solution path consistent.

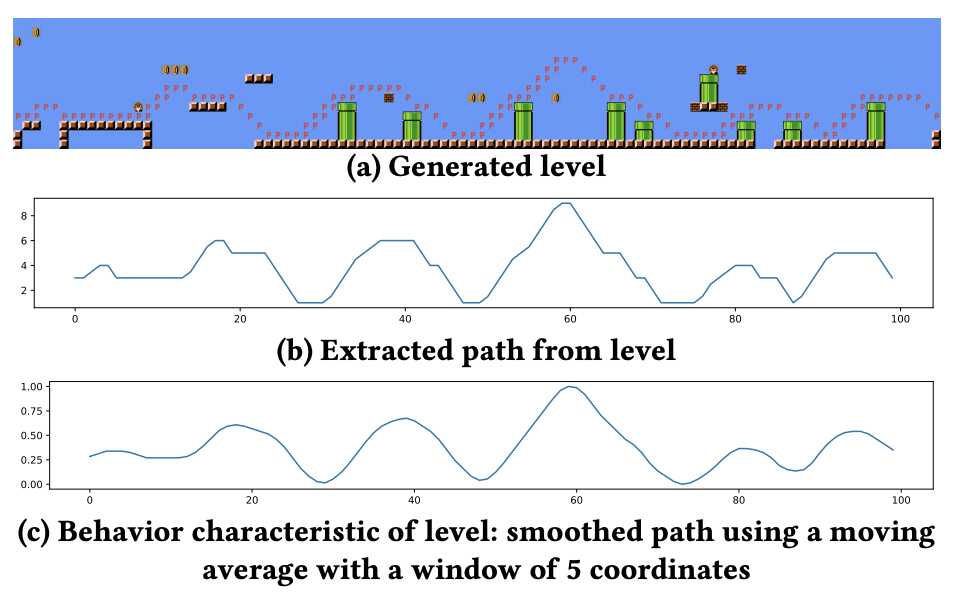

- (evaluate) The coordinates of the new level's solution path are normalised and averaged (see the figure below). The resulting vector of normalised average path coordinates is compared with the corresponding vectors of the K closest levels in the archive by computing the average distance to them. If this distance exceeds a certain threshold, the level is considered novel enough to be added to the archive of novel levels.
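
The evaluate step and the loop around it can be sketched as follows. This is a simplified illustration under stated assumptions, not the MarioGPT code: a path is a list of (x, y) tile coordinates normalised by the level's width and height, distance is Euclidean, and the `threshold`, `k` and toy `mutate` function are hypothetical. The toy mutation only redraws the path heights inside a random 40-80 column slice; in MarioGPT that step would regenerate the slice from a text prompt and in-paint the seam with MarioBert.

```python
import math
import random


def behaviour(path, width, height):
    """Normalise (x, y) path coordinates to [0, 1] and average them."""
    xs = [x / width for x, _ in path]
    ys = [y / height for _, y in path]
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def novelty(vec, others, k):
    """Mean Euclidean distance from vec to its k nearest neighbours in others."""
    nearest = sorted(math.dist(vec, o) for o in others)[:k]
    return sum(nearest) / len(nearest)  # assumes `others` is non-empty


def sample_slice(level_width, rng, min_len=40, max_len=80):
    """Pick a random column range [start, end) of min_len to max_len columns.

    Assumes level_width >= min_len.
    """
    length = rng.randint(min_len, min(max_len, level_width))
    start = rng.randint(0, level_width - length)
    return start, start + length


def novelty_search(initial_levels, mutate, describe, n_iters, rng, k=4, threshold=0.05):
    """Keep an archive of (level, behaviour) pairs and add sufficiently novel mutants."""
    archive = [(lvl, describe(lvl)) for lvl in initial_levels]
    for _ in range(n_iters):
        parent = rng.choice(archive)[0]
        child = mutate(parent, rng)
        child_vec = describe(child)
        if novelty(child_vec, [vec for _, vec in archive], k) > threshold:
            archive.append((child, child_vec))
    return archive


# Toy usage: a "level" is represented only by its path, one (x, y) point per column.
WIDTH, HEIGHT = 100, 14


def toy_mutate(path, rng):
    """Hypothetical mutation: redraw the path height inside a random slice."""
    start, end = sample_slice(WIDTH, rng)
    return [(x, rng.randint(0, HEIGHT - 1)) if start <= x < end else (x, y) for x, y in path]


flat_path = [(x, 12) for x in range(WIDTH)]
archive = novelty_search(
    [flat_path], toy_mutate, lambda p: behaviour(p, WIDTH, HEIGHT), n_iters=50, rng=random.Random(0)
)
```

Storing each level together with its behaviour vector means the novelty of every new candidate is measured against everything kept so far, without recomputing paths for the whole archive.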

This process is repeated N times. And if things go well, you get a model that can do this (to remind you how cool MarioGPT actually is):

🔮 Key Takeaways

😍 LLM for level generation. Using large language models like GPT-2 to generate game levels might seem unrealistic at first. However, MarioGPT shows that it is in fact a promising approach to procedural content generation: it produces diverse game levels, 88% of which are playable.

🎮 Evaluating playability. Generating images from a text prompt is challenging, but generating a game environment adds another layer of complexity, since the result must also be functional. Many previous methods rely on AI agents to evaluate playability, which is resource-intensive at best. In contrast, MarioGPT takes a different approach:

MarioGPT has an advantage over these algorithms because it can not only generate diverse levels but also predict accurate paths that an agent would take through them. This ability, combined with a diversity-driven objective, results in a computationally efficient method for generating diverse and playable levels.

🦾 Generative AI powered by Transformers. Whether you want to generate audio or a game level from a text prompt, it seems like Attention is All You Need. The Transformer architecture is omnipresent, and it looks like it is going to stay that way for some time.

💰 Better startup valuation. A (random) takeaway to finish: if your startup is struggling to raise money or the valuation is not what you would like, perhaps try adding Generative AI to your pitch deck. 😉

📣 Stay in touch

That’s it for this week. We hope you enjoyed reading this post. 😊 To stay updated about our activities, make sure you give us a follow on LinkedIn and Subscribe to our Newsletter. Any questions or ideas for talks, collaboration, etc.? Drop us a message at hello@aitu.group.